09-23-2024

Key Takeaways:

- Federal agencies need to comply with OMB Executive Memorandum M-24-10. With the right approach, they can establish even stronger AI governance and risk management practices.

- SAIC developed a holistic AI governance framework for our organization that is applicable to federal agencies and can help them balance innovation with the public interest.

- The AI governance dialogue will continue, and federal agencies should expect to adapt their approaches to the continually evolving AI landscape.

OMB Executive Memorandum M-24-10 outlines new AI governance, innovation and risk management standards.

Data is a mission asset. It can help federal agencies deliver services more effectively, meeting the public’s needs while optimizing operations. Yet outdated IT infrastructure is a massive roadblock. With CIOs and CAIOs so focused on modernizing in place to address the data infrastructure, it is important that AI governance is not overlooked. In fact, given a December deadline to comply with OMB Executive Memorandum M-24-10, it should be a top priority. As such, here are five issues for agency leaders to think about as they tackle AI governance.

AI for data and data for AI

Here’s the thing about AI governance. Data needs AI to unlock its full value. And AI needs data to unlock its full value—along with guardrails to ensure it is used responsibly. This symbiotic relationship between data and AI means that there is no becoming a data powerhouse today without AI governance.

Federal CIOs and CAIOs are responsible for harnessing the potential of AI while managing its risks and complexities. This is a difficult balance to strike. AI technologies are paradigm-shifting and advancing fast, offering new ways for agencies to achieve mission success.

In addition, so much is at stake for agencies if they don’t use AI ethically and responsibly. The OMB Executive Memo provides guidance to federal agencies about how to effectively plan for and deploy trustworthy AI systems, as directed by Executive Order 14110. The OMB guidance is welcome, but knowing how best to apply it within each agency will be challenging.

Achieve compliance—and more

The direction given in the Executive Memorandum creates an opportunity for CIOs and CAIOs to establish a stronger AI governance and risk management foundation by integrating best practices from other established standards.

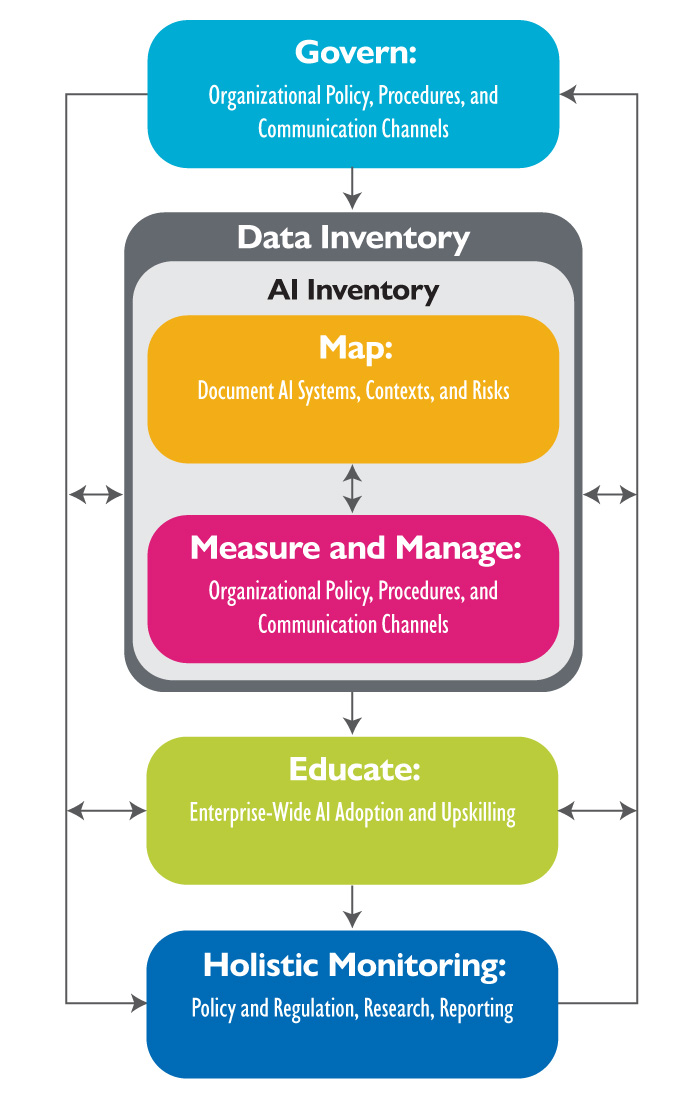

We used this value-added approach at SAIC to model a holistic AI governance framework for our organization. It’s applicable to federal agencies as well—a way to exceed the mandate by balancing innovation with the public interest. While there is no one-size-fits-all AI governance and risk management approach, the fundamentals of this model are grounded in five initiatives.

1. Establish a cross-functional AI governance board

AI risk management requires more collaboration than traditional cyber risk management. Instead of solely protecting systems and data from unauthorized access, AI risk management must also consider how algorithms process and interpret human-generated data to make decisions and predictions or to automate tasks. These capabilities can create additional risks, such as data bias, unfair outcomes and privacy harms, when AI misinterprets or misuses that data.

Creating an AI governance board is a critical first step in managing such complex risk dynamics. For one, enterprise AI delivers benefits across the agency. The more centralized the data is, the more potential benefits there are. And ubiquitous AI adoption requires that all stakeholders accept AI governance. With an AI governance board, you can ensure that governance is informed by perspectives from across the agency.

What the best AI governance boards do:

- Codify and communicate formal intake processes.

- Create a plain-language AI glossary.

- Educate the workforce on compliance guardrails.

- Create a flexible governance model.

The AI governance board’s primary focus is to inform, evaluate and drive policies, practices, processes and communications channels related to AI risk. Boards help make the most of the AI opportunity within the context of the mission, building trust and transparency with the workforce, stakeholders and the public. Over time, they foster a common culture of informed, proactive AI risk management.

2. Maintain an inventory of AI use cases and solutions

The Executive Memorandum requires agencies to inventory AI use cases at least annually, submit them to OMB and post them on the agency website for full transparency.

The most comprehensive inventories account for AI development projects, procured AI systems, AI-related R&D, data and information types and AI actors. They also include project and development status, developer information, use case summary and context details, data sources, security classification of information, AI techniques used and responsible point of contact. The AI inventory requirements are also likely to be revised regularly, as we are already seeing efforts by lawmakers and the White House to update reporting requirements with the goal of promoting greater transparency.
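As a rough illustration of what one inventory entry might look like in practice, the sketch below models a record with the fields listed above and exports the inventory as CSV. The class name `AIUseCase`, the field names and the `to_csv` helper are assumptions made for this example; they are not an OMB-prescribed schema or part of any SAIC product.

```python
from __future__ import annotations

import csv
import io
from dataclasses import asdict, dataclass, field


@dataclass
class AIUseCase:
    """One entry in a hypothetical agency AI inventory (illustrative fields only)."""
    name: str
    summary: str
    status: str                 # e.g. "planned", "in development", "in production"
    developer: str              # in-house team or vendor
    data_sources: list[str] = field(default_factory=list)
    security_classification: str = "unclassified"
    ai_techniques: list[str] = field(default_factory=list)
    point_of_contact: str = ""


def to_csv(use_cases: list[AIUseCase]) -> str:
    """Serialize the inventory to CSV text; list-valued fields are joined with ';'."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(AIUseCase.__dataclass_fields__))
    writer.writeheader()
    for use_case in use_cases:
        row = {
            key: ";".join(value) if isinstance(value, list) else value
            for key, value in asdict(use_case).items()
        }
        writer.writerow(row)
    return buffer.getvalue()
```

Keeping each entry in a typed record rather than free-form spreadsheet cells makes it easier to check that every required field is filled in before the inventory is submitted or published.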

Conducting these inventories is typically time-consuming because there are many elements to log for each inventory item. While agencies can conduct effective inventories using spreadsheets, automation streamlines this process. AI development platforms like SAIC’s no-code and low-code Tenjin are effective tools for managing AI assets. The added transparency meets a compliance mandate and provides the benefit of allowing CAIOs to assess the impacts of AI on mission delivery. Users do not have to be skilled data scientists, analysts or engineers to use Tenjin.

Tenjin’s governance capabilities further enable enterprise-grade governance and AI portfolio oversight with standardized project plans, risk and value assessments, a centralized AI inventory and model registry, as well as workflow management where users can document reviews and sign off on assessments. This makes it a vital toolkit for federal agencies working to comply with requirements outlined in the Executive Memorandum.

3. Map, measure and mitigate AI risk

Safety-impacting AI has the potential to significantly impact the safety of human life or well-being, the environment, critical infrastructure, etc.

Rights-impacting AI serves as a principal basis for a decision or action concerning a specific individual or entity’s civil rights, right to privacy, access to critical resources, equal access to opportunities, etc.

Source: OMB Executive Memo M-24-10

With a completed inventory, the next step is to map the different levels of risk associated with each inventory item. This is key to help you address the highest-risk AI use cases first.

The Executive Memorandum requires context-based and sociotechnical categorization of AI as part of this mapping. OMB defines two categories: safety-impacting AI and rights-impacting AI. Safety-impacting AI has physical and material impact, while rights-impacting AI affects personal liberties and justice. Sometimes the effects on safety and rights become known only after deployment. But there is a lot that agencies can do to assess the potential effects of AI ahead of its deployment. A good AI governance structure has provisions for monitoring AI algorithms in development and operation.
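To make the idea of addressing the highest-risk use cases first more concrete, here is a minimal sketch that tags each inventory item as safety-impacting or rights-impacting and sorts the list for review. The `MappedUseCase` class, the `priority` function and its weights are hypothetical and purely illustrative; the memorandum does not define a scoring formula, and an agency would set its own criteria.

```python
from dataclasses import dataclass


@dataclass
class MappedUseCase:
    name: str
    safety_impacting: bool  # could significantly affect safety of people, environment, infrastructure
    rights_impacting: bool  # principal basis for decisions about rights, privacy, access
    in_production: bool     # already deployed, so review is more urgent


def priority(use_case: MappedUseCase) -> int:
    """Hypothetical review score: higher means review sooner (weights are assumptions)."""
    score = 0
    if use_case.safety_impacting:
        score += 2
    if use_case.rights_impacting:
        score += 2
    # A flagged use case that is already live gets bumped ahead of one still in development.
    if use_case.in_production and score > 0:
        score += 1
    return score


def review_order(use_cases):
    """Return use cases from highest to lowest priority; ties keep their original order."""
    return sorted(use_cases, key=priority, reverse=True)
```

For example, a deployed benefits eligibility screener tagged as rights-impacting would sort ahead of a safety-impacting tool still in development, and both would sort ahead of an internal document summarizer with neither tag.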

By taking mapping further, you can get an even more comprehensive understanding of risk. Consider using Federal Information Processing Standards Publication 199 (FIPS 199), which provides a standardized method for categorizing the security of information and information systems. In addition, NIST's AI Risk Management Framework (AI RMF) and accompanying standards provide best practices for assessing and mitigating identified AI risk. Looking ahead, several pieces of pending legislation in Congress point to the NIST framework as lawmakers seek to enact risk management requirements. Again, AI-powered tools like Tenjin can help you turn this risk mapping into an interactive management tool.
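As one way to picture a FIPS 199 style categorization, the sketch below rates each information type as low, moderate or high for confidentiality, integrity and availability, then takes the highest rating per objective across all information types on a system (the "high water mark"). The function name `system_category` and the sample information types are assumptions for illustration; the actual ratings would come from an agency's own analysis.

```python
from enum import IntEnum


class Impact(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


OBJECTIVES = ("confidentiality", "integrity", "availability")


def system_category(information_types):
    """Per-objective high water mark across the information types on one system.

    `information_types` maps a type name to a dict of objective -> Impact.
    Raises ValueError if no information types are supplied.
    """
    return {
        objective: max(ratings[objective] for ratings in information_types.values())
        for objective in OBJECTIVES
    }
```

A system that holds both public web content and applicant records would inherit the higher confidentiality rating of the applicant records, which is a useful signal when deciding how closely an AI use case built on that system should be reviewed.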

4. Grow AI literacy by educating the workforce

Although the Executive Memorandum does not expressly stipulate any requirements for educating the workforce in AI, it does require agency AI strategies to include an assessment of the agency’s AI workforce capacity and projected needs, as well as a plan to recruit, hire, train, retain and empower AI practitioners and achieve AI literacy for non-practitioners. Workforce education is a critical success factor that should be a part of any AI governance approach.

For one, most of the subject matter experts who will participate in the AI governance board, such as ethics officers, legal counsel, diversity and inclusion representatives and human resources officers, may not be technical personnel by training. However, to contribute the most value to the board and the governance development process, they should have a foundational understanding of AI technologies.

This understanding is equally important for the entire workforce. AI is a consequential technology. It will ultimately impact how work is done and how citizens are served across the federal sector. The more that employees know, the more they will appreciate the importance of complying with AI governance guardrails. Hands-on training and upskilling with a low-code, no-code AI platform like Tenjin that is accessible across skill levels is an excellent way to grow AI literacy across your workforce.

5. Continuously monitor the changing AI landscape

Proactive AI governance is never achieved in a single effort. By design, it should be iterative and evolving. This is possible through a combination of awareness and action.

Awareness is everyone’s responsibility. The AI governance board and senior leadership should track the regulatory, policy, research and technological landscapes to determine if (and how) changes impact AI governance. Employees can track governance gaps from a more pragmatic, day-to-day perspective. For example, people working in departments that handle personal health, financial or demographic information can monitor how AI systems use and protect this data. Or employees in communications can monitor citizen feedback about AI-powered services to improve governance and transparency.

To be able to pivot fast, AI governance boards should develop protocols and policies early on for how to quickly update governance, communicate changes and flex organizational structures and ways of working. Through all of this, lines of communication should be bidirectional, flowing seamlessly from senior leadership to employees and vice versa.

While there is a pending deadline to comply with the OMB Executive Memorandum, the AI governance dialogue should be ongoing and progressive in response to the ever-evolving AI landscape. There is no reason to wait to pursue AI governance. By approaching the Executive Memorandum more holistically, you can go beyond complying with the mandate. You can exceed it while building the foundation your agency needs to turn data into a mission asset.

Learn more

To learn more about how to meet the OMB guidance and make AI governance a priority in your agency, contact Srini Attili.

Learn more about SAIC's federal civilian solutions